In an age where everything is already documented, archived, and geo-tagged, *Seeing Sound, Hearing Cities* doesn’t aim to record the city. It rewires how we perceive it. This isn’t ambient noise with a poetic filter. This is raw data, stripped of aesthetics, turned into vibration.

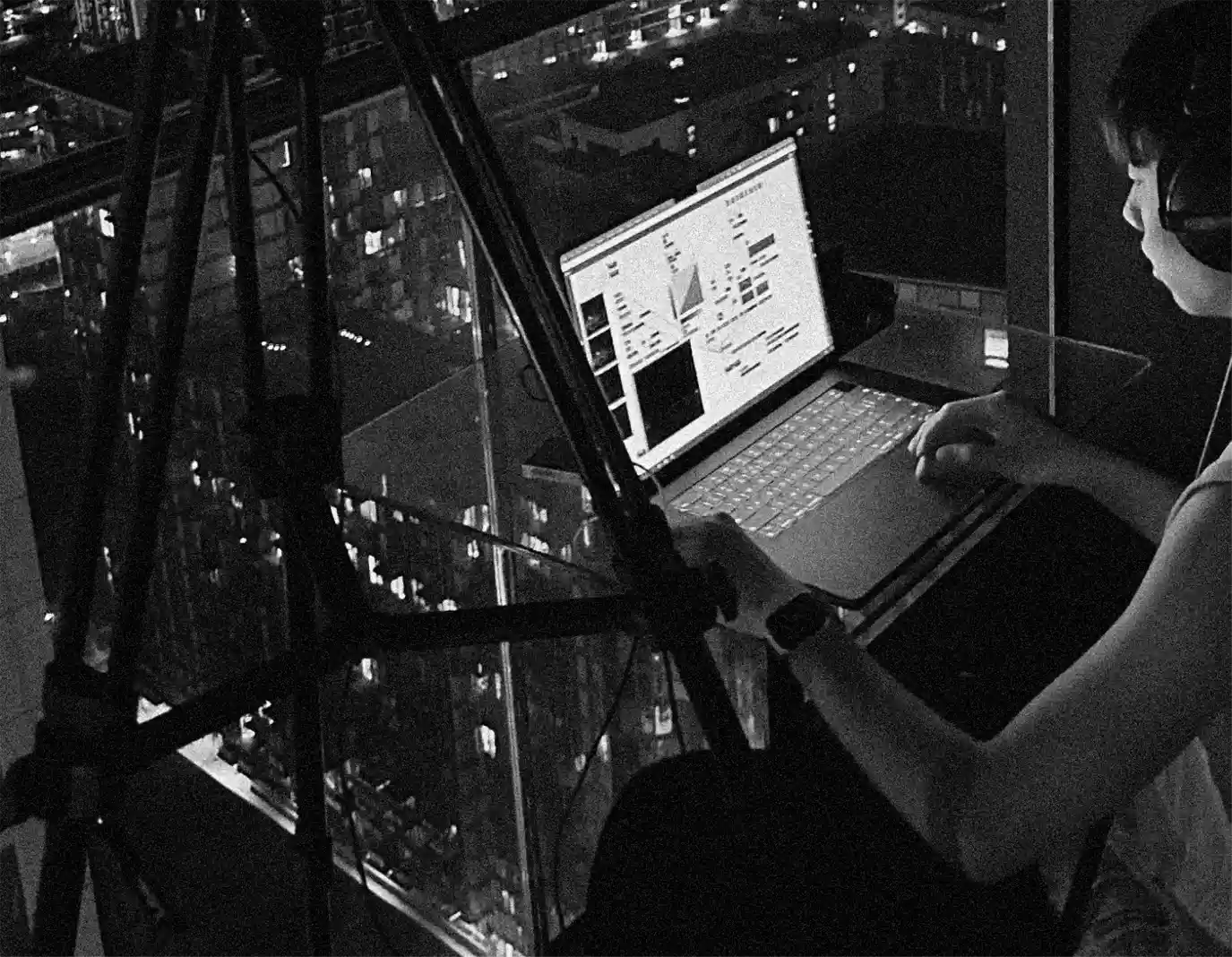

At the heart of the project is a live visual-to-audio pipeline. City lights are captured in real time, frame by frame. Grayscale conversion reduces each frame to brightness alone, stripping away the visual noise of color. A frame-difference algorithm then subtracts each frame from the one before it, light from light, so that only the flickering changes remain. The result? A matrix of flashes, each pixel a pulse. These are sonified into sine waves, not for beauty, but for purity. No chords, no melody, no human bias. Just frequency, rhythm, and the anatomy of urban light rendered audible.
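For readers who want to see the frame-difference step concretely, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the project's actual implementation: the function names `to_gray` and `frame_difference`, the luma weights, and the `threshold` parameter are all hypothetical choices made for this example.

```python
def to_gray(frame):
    """Convert an RGB frame (rows of (r, g, b) tuples, 0-255) to grayscale.

    Assumption: standard luma weights; the project may weight channels differently.
    """
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in frame]


def frame_difference(prev_gray, curr_gray, threshold=10.0):
    """Return the absolute per-pixel change between two grayscale frames.

    Changes smaller than `threshold` (a hypothetical noise floor) are set
    to zero, so only pixels that actually flickered survive.
    """
    flashes = []
    for prev_row, curr_row in zip(prev_gray, curr_gray):
        row = []
        for p, c in zip(prev_row, curr_row):
            change = abs(c - p)
            row.append(change if change >= threshold else 0.0)
        flashes.append(row)
    return flashes


# Two tiny 2x2 "frames": one window switches on, the rest stay the same.
frame_a = [[(0, 0, 0), (200, 200, 200)],
           [(50, 50, 50), (0, 0, 0)]]
frame_b = [[(255, 255, 255), (200, 200, 200)],
           [(52, 52, 52), (0, 0, 0)]]

flashes = frame_difference(to_gray(frame_a), to_gray(frame_b))
```

In this toy case only the top-left pixel survives as a flash. The steady light and the faint two-level drift both fall below the threshold and go silent.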

The system is built in modular environments: image-processing algorithms flow into brightness and contrast adjustments, which then control sound oscillators. The city becomes an accidental composer, each blink a note, each skyline an evolving score. You don’t choose the music. The lights write it for you.
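The chain from brightness and contrast adjustment to oscillators could look something like the sketch below. Every mapping here is an assumption made for illustration: the source does not say how pixels are assigned to frequencies, so this example maps vertical position to pitch (higher rows sound higher) and flash intensity to amplitude. The names `adjust`, `pixel_to_oscillator`, and `render`, and the frequency range, are hypothetical.

```python
import math


def adjust(value, brightness=0.0, contrast=1.0):
    """Scale contrast around mid-gray, add brightness, clamp to 0..255."""
    v = (value - 127.5) * contrast + 127.5 + brightness
    return max(0.0, min(255.0, v))


def pixel_to_oscillator(row, value, n_rows, f_low=110.0, f_high=1760.0):
    """Assumed mapping: row position sets frequency, intensity sets amplitude."""
    t = 1.0 - row / max(n_rows - 1, 1)        # 1.0 at the top row, 0.0 at the bottom
    freq = f_low * (f_high / f_low) ** t      # exponential sweep between the two limits
    amp = value / 255.0
    return freq, amp


def render(flashes, sample_rate=8000, duration=0.05,
           brightness=0.0, contrast=1.0):
    """Sum one sine oscillator per non-zero pixel into a short mono buffer."""
    n_rows = len(flashes)
    oscillators = []
    for r, row in enumerate(flashes):
        for value in row:
            v = adjust(value, brightness, contrast)
            if v > 0.0:
                oscillators.append(pixel_to_oscillator(r, v, n_rows))

    n_samples = round(sample_rate * duration)
    if not oscillators:
        return [0.0] * n_samples              # no flicker, no sound

    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        mix = sum(a * math.sin(2.0 * math.pi * f * t) for f, a in oscillators)
        samples.append(mix / len(oscillators))  # normalise to stay within -1..1
    return samples


# A 3x2 matrix of flashes: one bright pixel at the top, one dimmer at the bottom.
demo_flashes = [[255.0, 0.0],
                [0.0, 0.0],
                [0.0, 128.0]]
demo_samples = render(demo_flashes)
silent_samples = render([[0.0, 0.0], [0.0, 0.0]])
```

Dividing the mix by the number of active oscillators is one simple way to keep the output from clipping. A real-time system would more likely stream the buffer to an audio device and smooth amplitude changes between frames.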

It’s an artificial synesthesia machine. A camera as ear. A synthesizer as eye. Through it, the silent city becomes a restless, whispering organism. And once you’ve heard it, it’s impossible to un-hear.

Credit and Special Thanks

Danhua Zhu

Huiqun Xu